By Reginald T. Welles

Special to Police1

Mention “applied physics” in an academy classroom and watch the eyes glaze, the angst build, and the room empty. Mention “high performance driving” and you get rapt attention. So what’s the difference, other than the name and the discomfort that the word physics can create? The practitioners of this science are the Emergency Vehicle Operations (EVO) driver training instructors. They are the main conduit for translating vehicle dynamics into practical lessons and procedures that teach students how to control their vehicles. Control is the most critical of vehicle handling skills and requires a working knowledge of vehicle dynamics. Teaching EVO demands an in-depth combination of skill, experience, and knowledge. These attributes set EVO instructors apart from their training colleagues as “bred for the track” specialists.

Driving simulation has become an increasingly important aspect of officer training – there are many factors that make a simulator behave like a real vehicle. (Photo courtesy of DriveSafety)

Adopting the driving simulator

As specialists in vehicle handling, EVO instructors are often asked to evaluate driving simulators for inclusion in their organization’s training regimen. How this task is accomplished often reflects the instructor’s simulator experience combined with the training plan’s defined expectation of simulator performance (more on this training plan later). In other words, the instructor is asked to evaluate the simulator’s vehicle dynamics model. It is a daunting task, as simulator vehicle dynamics is one of the least understood yet most fundamental components that define a simulator’s behavior. For EVO trainers to be effective in this role, a working knowledge of vehicle dynamics is essential because the way in which the simulator behaves can affect the student’s performance. This article provides a brief glimpse into the application issues to be considered in simulator-based driver training from the perspective of the vehicle dynamics model. The operative word here is brief.

The basic role of a vehicle dynamics model

The performance of a simulated patrol car, fire engine, or ambulance is defined by its vehicle dynamics model. To an EVO trainer, that simple statement should have the same effect that “I only had one beer” has on the officer on patrol. It is suspiciously simple and needs clarification and definition. From the engineer’s perspective, performance means “how closely can the simulator match the real vehicle?” From the trainer’s perspective, performance involves two distinct characteristics: feel and behavior. Both how the simulator feels to the driver (steering feedback, ABS vibration, motion cueing) and how it behaves in a scenario in response to driver inputs (lateral acceleration around curves, braking, tire-to-road surface interaction, understeer and oversteer) are defined by the vehicle dynamics model. Each manufacturer’s simulator has its own signature for feel and behavior. Trainers develop preferences and impressions based on these qualities. So where do these properties come from?

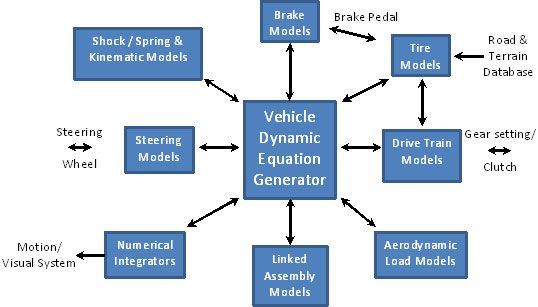

Feel and behavior originate with the vehicle dynamics model’s core algorithms, equations of motion, kinematic constraints (that’s engineering talk for the range of a subsystem’s dimensional performance, like a shock’s extension or compression distance), data flow constructs (more techno-babble defining software information and how it is processed and passed on to the next point), and data files that establish performance boundaries and behavior properties. Together these drive most of the other subsystems and create the feedback cues needed to establish a believable replication of the actual vehicle.

Vehicle dynamics is very much like an iceberg: what you see is only 1/8th of what you get. The properties of feel and behavior equate to that 1/8th and, because they are the most visible properties, are often used to decide a purchase. Let’s take a quick look under the surface at the less discussed 7/8ths that affect your simulator’s performance.

Designing to purpose – it’s all about training

Trick question: when a student straps on a simulator, what can he expect? Students generally expect what they are told to expect by the trainers. The question should be what do they get? That’s where the other 7/8ths comes in.

The accuracy with which the simulator replicates the real vehicle is often referred to as Performance Fidelity. That is to say, how real does it feel? In the training environment this is called Face Validity. While this measure of accuracy is important to enable a training process, it is not sufficient in itself to establish the effectiveness of that training. Effectiveness requires establishing and measuring additional values, briefly described below.

At this point, let’s digress for a moment and review what is often encountered when evaluating a driving simulator. With the simulator up and running, we are ready to test out these equations and lines of code. The first step is to drive it. As you drive the simulator, your colleagues ask how it feels. If the simulator provides the performance expected, you put it through its paces. This whole process addresses fidelity, that is, how well the simulator matches the actual vehicle’s behavior and performance. It is a highly sensitive cost issue because increasing fidelity demands greater detail in the simulation, which increases the cost.

Simplified view of a vehicle dynamics model and its interconnectivity with functional relationships. Simply put, it is not just an equation, it’s a tuned system.

In the training world (where simulators are used as part of a training regimen), the principal need is for validity in the effects that the training experience has upon the trainee. These effects may be measured by many factors, including the student’s acceptance and the transfer of behaviors from the training to the real world. This type of validity establishes the real value of the training. Yet the cost of the simulation primarily depends upon its fidelity. These two issues are clearly different. Just how different is a critical point for the trainer to understand, especially when shopping for a simulator. Now, as you drive the simulator, your colleague asks, “Did you learn anything?”

Unfortunately, some simulator hardware manufacturers have used the terms “fidelity” and “validity” interchangeably, thereby appearing to equate them. That confusion may promote the sale of simulators, but an effective training system design requires a clear distinction between these two different issues:

1. Face Validity (referring to simulator fidelity) and

2. Construct Validity (referring to training effectiveness).

“Face Validity” can be measured quantitatively as the degree of replication provided by the simulator hardware in relation to the real world vehicle. In other words, how real is it? This can be measured by comparing test track data from real vehicles with data captured in the simulator while performing the same maneuvers. Keep in mind that only the data and formulas have been compared. Complete face validity will require sensory feedback cues that are tuned to human sensory thresholds. This means getting the right math and formulas to feel correct after they pass through the computer, pedals, steering system, image system and the complete array of synthetic devices often referred to as Force Control Units (FCU), which provide direct stimuli to the driver.
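To make the data comparison concrete, here is a minimal Python sketch, not any manufacturer’s method. It assumes lateral acceleration was logged at the same instants on the test track and in the simulator during the same maneuver, and it summarizes the mismatch as a root-mean-square difference. The sample values and the names `rmse`, `track_lat_g` and `sim_lat_g` are hypothetical, chosen only for illustration.

```python
import math


def rmse(track, sim):
    """Root-mean-square difference between two equally sampled traces."""
    if len(track) != len(sim) or not track:
        raise ValueError("traces must be non-empty and the same length")
    squared = [(t - s) ** 2 for t, s in zip(track, sim)]
    return math.sqrt(sum(squared) / len(squared))


# Hypothetical lateral acceleration samples (in g) for one lane-change maneuver.
track_lat_g = [0.00, 0.20, 0.40, 0.60, 0.40, 0.20, 0.00]  # real vehicle on the track
sim_lat_g = [0.00, 0.25, 0.45, 0.55, 0.35, 0.20, 0.00]    # same maneuver in the simulator

error_g = rmse(track_lat_g, sim_lat_g)
print(f"RMS difference in lateral acceleration: {error_g:.3f} g")
```

A smaller number means the two traces agree more closely. What counts as close enough is a question for your training plan, and matching numbers alone still say nothing about whether the cues feel right to the driver.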

For the trainer, face validity manifests itself as feel and behavior, the properties traditionally most sought after in comparing simulators to the real vehicle.

The subject of construct validity requires its own paper and is mentioned here to expand the driver trainer’s awareness of how important it can be. That fact established, it should be noted that “Construct Validity” is harder to prove as it requires a training system designed to do it. However, it can be measured quantitatively as the degree of replication provided by the simulated environment (training scenario) in relation to the real world environment. This can be measured by comparing driver performance on the test track in a real car to the driver’s performance in the simulated environment. The observed and recorded driver behavior measured in reduced errors is the metric called transfer of training. In other words, it is the measure of how well the simulated training environment can substitute for a real world (actual in-car) training environment causing positive driver behavior changes (learning).
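As a rough illustration of measuring reduced errors, the Python sketch below computes the fractional drop in errors between a driver’s track run before simulator training and the run after it. The function name `error_reduction` and the error counts are hypothetical. A real evaluation would also need a comparison group and consistent scoring rules, which this sketch assumes away.

```python
def error_reduction(errors_before, errors_after):
    """Fractional reduction in errors; positive means fewer errors after training."""
    if errors_before <= 0:
        raise ValueError("errors_before must be positive")
    return (errors_before - errors_after) / errors_before


# Hypothetical error counts scored on the same test track course.
errors_before_training = 20  # track run before simulator training
errors_after_training = 14   # track run after simulator training

reduction = error_reduction(errors_before_training, errors_after_training)
print(f"Errors reduced by {reduction:.0%}")
```

A negative result would mean the driver made more errors after training, which is one way negative training could show up in the records.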

How a simulator’s mission sets the performance criteria for the vehicle dynamics

Many things affect a real vehicle’s motion properties. What affects the real vehicle will also affect the simulator model. How realistically a simulator must behave depends on the objective of the training or, more to the point, on the training result you are trying to achieve. This is a subtle way of introducing the difference between a simulator and a training tool. The training tool is the simulator defined to meet the objectives of your training plan. Straight out of the box, a simulator should have its performance base-lined to demonstrate that its required fidelity was delivered. Additionally, audit all software, test its functionality, and review the accompanying documentation. If you paid for training, record the training session (if allowed) for later review when your team needs a refresher or when new personnel arrive.

That training plan is a non-trivial document. It should be created either by or for the training agency and used to define the simulator performance criteria and required training application software. For example, a plan could identify where in the EVO course the simulator is used and how it is used; details on the training regimen, student use, expected results, and policy training; how driver performance is documented; passing scores; what is recorded; and how the simulator’s return on investment (ROI) will be measured. This training plan can be used as a request for proposal or to have the factory comment on their simulator’s ability to comply with your expectations.

Keep in mind that the ability to achieve complex training tasks is what makes a vehicle dynamics model complex. Training usually requires flexibility. Flexibility requires not only modularity allowing interaction, but also adjustment and replacement of subsystem models (tires, drive train and power distribution, instruments, control devices, steering linkage, compliances, suspension configuration, shocks, struts, springs, brakes, compensation systems and articulations). Training objectives can dictate additional complexity by increasing update rates or requiring accurate tire-to-road surface behavior; all of which can impact costs. The training environment impacts the vehicle dynamics model, as the model must recognize environmental details and react to them correctly on the fly. Weight distribution, speed, orientation, road conditions, standing water, snow and dirt depth, weather conditions and wind are some of the factors that affect how vehicles travel down the roadway.
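The idea of replaceable subsystem models reacting to road conditions can be sketched in a few lines of Python. This is a deliberately simplified illustration, not how any particular simulator is built: the vehicle is treated as a point mass whose cornering limit is v = sqrt(mu * g * r), and the class names (`TireModel`, `TableTireModel`, `VehicleModel`) and friction values are hypothetical placeholders.

```python
import math
from dataclasses import dataclass
from typing import Protocol

G = 9.81  # gravitational acceleration, m/s^2


class TireModel(Protocol):
    """Interface any swappable tire subsystem is assumed to provide."""

    def friction_coefficient(self, surface: str) -> float: ...


@dataclass
class TableTireModel:
    """Hypothetical tire model: looks up a placeholder friction value per surface."""

    mu_by_surface: dict

    def friction_coefficient(self, surface: str) -> float:
        return self.mu_by_surface[surface]


@dataclass
class VehicleModel:
    tires: TireModel  # subsystem that can be replaced without touching the rest

    def max_corner_speed(self, radius_m: float, surface: str) -> float:
        """Point-mass cornering limit in m/s: v = sqrt(mu * g * r)."""
        mu = self.tires.friction_coefficient(surface)
        return math.sqrt(mu * G * radius_m)


# Placeholder friction values for illustration only, not measured tire data.
patrol_car = VehicleModel(tires=TableTireModel({"dry": 0.9, "wet": 0.5}))

dry_speed = patrol_car.max_corner_speed(50.0, "dry")
wet_speed = patrol_car.max_corner_speed(50.0, "wet")
print(f"50 m curve limit: dry {dry_speed:.1f} m/s, wet {wet_speed:.1f} m/s")
```

Swapping in a different tire model changes the car’s behavior on every surface without rewriting the vehicle itself. A production model would add weight transfer, suspension, and much more, which is where the cost comes from.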

If the dynamics model doesn’t have the ability to recognize and interact correctly with all the key environment variables, it will likely induce inaccurate cues; and, depending on how the simulator is used, the inaccurate cues will significantly erode Construct Validity. That could even cause negative training. This underscores the importance of matching the vehicle dynamics model to the intended purpose of the simulator. If the main purpose is training, the design of the vehicle dynamics must carefully correlate with the operational demands of the training scenarios in order to achieve maximum training value.

Providing human sensory cueing

In addition to providing an accurate mathematical representation of the vehicle and its ability to react to its environment, the vehicle dynamics package must trigger events, control and compensate for system lags, avoid introducing false or exaggerated behavior, and assure that all motion or virtual movement cues created by various FCU devices (steering wheels, pedals, gauges, switches) are correlated in phase within the bounds of human sensory thresholds. In other words, it has to feel right. Typically, the simulator depends upon the dynamics model to initiate forces and cues in response to driver inputs. In return, the dynamics model must present the expected or minimally defined stimuli to the driver’s suite of senses via the simulator’s subsystems. Driver sensory inputs include visual, proprioceptive (the sense that indicates whether the body is moving), tactile (touch), haptic (how one feels inside – visceral), and auditory stimuli.

Sensory cueing must be correlated by the integration of motion systems, tactile transducers, visual systems, steering force feedback, drive train sound systems and pedals that provide tactile pressures and ABS vibrations. The critical thing to keep in mind is that all of these must function together through a continuous range of variations that extend throughout the application environment without going unstable or producing any perceptible erroneous behavior. That type of performance maximizes Construct Validity.
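One simple way to picture cue correlation is to compare how long each cue takes to reach the driver after an input. The Python sketch below flags any cue channel that lags the fastest channel by more than a chosen tolerance. The function name `out_of_sync`, the delay figures, and the tolerance are all hypothetical; the tolerance in particular is a placeholder, not a published human sensory threshold.

```python
def out_of_sync(cue_delays_ms, max_skew_ms):
    """Return the cue channels that lag the fastest channel by more than max_skew_ms."""
    fastest = min(cue_delays_ms.values())
    return sorted(
        name
        for name, delay in cue_delays_ms.items()
        if delay - fastest > max_skew_ms
    )


# Hypothetical delays (ms) from driver input to each cue reaching the driver.
cue_delays_ms = {"visual": 50, "motion": 40, "steering": 45, "audio": 95}

# Placeholder tolerance for illustration only.
late = out_of_sync(cue_delays_ms, max_skew_ms=30)
print("Cues out of step:", late)
```

In this made-up case only the audio channel would be flagged. A real check would have to hold across the whole range of speeds, surfaces, and maneuvers the training scenarios cover.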

Conclusion – Fidelity doesn’t always equate with value

Now you know why the Titanic sank. It’s that hidden 7/8ths that can really have an impact on your simulator’s performance. Vehicle dynamics may be the core element that dictates how a vehicle behaves. However, in a simulator, vehicle dynamics is more than just a physics model. It is the relationship, effect, and interaction with the synthetic environment, packaged and tuned to meet human expectations. Those expectations include having the simulator perform as an effective part of your training regimen. When you get right down to it, if the simulator has little to no training value, who cares how real it feels? This article should leave you with at least three impressions: 1) a strong sense of relief that you are not an engineer, 2) an appreciation for all of the issues that go into providing fidelity in a simulator, and 3) an understanding that regardless of the simulator’s fidelity or your math scores on the SAT exam, it’s the EVO trainer and the training cadre, not the manufacturer, who are held accountable for making a simulator an effective training tool.

Reginald T. Welles, President & CEO – Applied Simulation Technologies (AST)

Since the mid-1980s, Reg has been at the forefront of high performance driving simulator development. He was the program manager for the Evans & Sutherland (E&S) driving simulation group and developed simulation technology for Daimler-Benz, BMW, Toyota, Nissan, GM, Freightliner, Volvo, and Fiat, as well as many universities and research facilities.

In 1990, Reg and his partner Darrell Turpin started I-Sim Corporation to adapt the latest engineering simulator technology for training applications. He established technology agreements with Eaton Corporation to commercialize the Transmission Shifter Simulator, and with Goodyear to design real-time tire data integration techniques. While at I-Sim, the PatrolSim & TranSim series of simulators were designed and built. I-Sim also provided technical advice to, and participated in, the Federal DOT’s National Advanced Driving Simulator (NADS) program at the University of Iowa.

Past responsibilities include Technical Advisor to the National Teen Research Center and Technical Advisor for the 21st Century Teen Driving Program for the Joshua Brown Foundation. Present assignments include Technical Advisor for Career Path Training Schools; Technical Advisor for the Teamster Training and Education Fund; and Technical Advisor for the National Driver Training Center in Georgia, sponsored by the Georgia chapter of the National Safety Council. (The center’s mission is to provide comprehensive pre- and post-licensure research and evaluation on programs focusing on high risk drivers.)

Reg and his partner Darrell started Applied Simulation Technologies (AST) to focus on providing simulation users with technology, software and insight into the best designs and use of simulation systems for training. AST provides instructional system design and functional applications expertise to the commercial, law enforcement and government communities. Reg’s team has successfully completed three years of in-the-field evaluation and validation of the first, simulator-based, objective driver training performance measurement software package for emergency driver operations. Results have shown consistent improvement in driver performance of over 50%.

Reg has published over 20 papers on driving simulation applications and has over 33 years of engineering, technical & corporate management experience, with special emphasis in real-time driving simulation systems, 3D computer graphics, immersive/interactive training environments and satellite tracking and communications systems. He has a BS in Aeronautical Engineering from San Jose State University, an MBA, and is a former Army Officer.